Every industry is racing to prove its “AI-powered” credibility. Press releases repeat the same phrases: “intelligent,” “seamless,” “reduce burden.” The sameness isn’t accidental. It’s the result of algorithmically optimised language, a promotional echo chamber where machine-written narratives begin to outweigh the truth they were meant to describe.

This isn’t just a marketing problem. It’s a knowledge problem. And it cuts to the core of why human involvement in knowledge management has never been more critical.

When Knowledge Becomes Noise

Organisations today face the same vulnerability that cybersecurity vendors revealed: when machines generate both the claims and the evidence, verification collapses.

- Documents are AI-summarised and re-summarised until their origin is lost.
- Reports circulate with no human anchor, their authority based on formatting, not substance.
- Lessons learned blur into lessons hallucinated, repeated across tools until no one can recall the original source.

In this environment, information doesn’t just get lost; it gets contaminated. The danger is not the absence of data, but the inability to distinguish between human insight and algorithmic fiction.

Why the Human Matters

Machines can generate documents, summaries, taxonomies, even “insights.” But only humans can:

- Provide context: Was this decision made under pressure, or was it strategic?
- Carry intent: Why did we choose this path, and what trade-offs were accepted?
- Anchor trust: Who can vouch for the validity of this knowledge, and who lived its consequences?

Without human validation, knowledge systems risk becoming self-referential echo chambers: algorithms reinforcing algorithms until the enterprise loses its ability to remember what was real.

Phlow’s Approach: Human in the Loop by Design

At Phlow, we believe AI should amplify human intelligence, not overwrite it. That’s why the Human-in-the-Loop is not an afterthought but the foundation.

-

Tacit to Explicit: Phlow surfaces tacit knowledge from people, conversations, and context, then makes it explicit and reusable. Machines alone cannot do this; it requires human articulation.

-

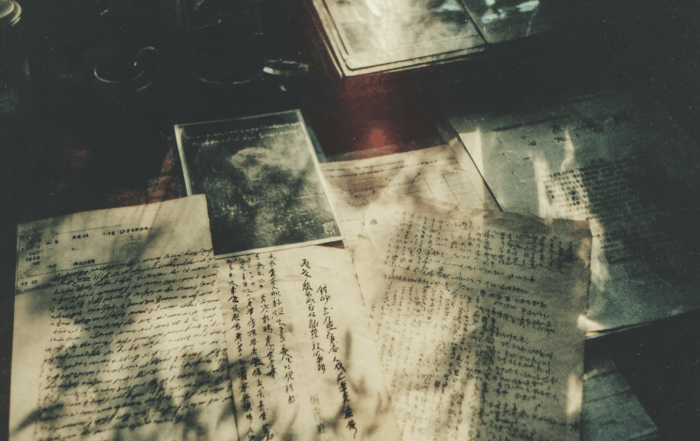

Anchored Attribution: Every piece of knowledge is linked back to its human source. Not just “a document says this,” but “this insight comes from Anna, who solved it last quarter.”

-

Contextual Graphs: Knowledge is not stored as flat files or summaries, but as relationships, between people, projects, and decisions, ensuring that meaning is preserved beyond machine-generated text.

-

Transparency over Automation: Phlow uses AI to structure, connect, and retrieve knowledge, but the final step always brings the human back in. The machine organises; the human validates.
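To make the ideas above a little more concrete, here is a minimal Python sketch of how attribution, relationships, and a human validation gate might fit together. It is an illustration only, not Phlow’s actual implementation: `KnowledgeItem`, `KnowledgeGraph`, every method name, and the sample data are hypothetical and chosen purely for this example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class KnowledgeItem:
    """Hypothetical record: one piece of knowledge tied to a person."""
    statement: str
    source_person: str                  # anchored attribution: the human source
    context: str                        # why or when the insight arose
    validated_by: Optional[str] = None  # stays None until a human signs off

    @property
    def is_validated(self) -> bool:
        return self.validated_by is not None


@dataclass
class KnowledgeGraph:
    """Hypothetical store: items plus (subject, relation, object) edges."""
    items: dict[str, KnowledgeItem] = field(default_factory=dict)
    edges: set[tuple[str, str, str]] = field(default_factory=set)

    def add_item(self, item_id: str, item: KnowledgeItem) -> None:
        # Refuse knowledge that has no human anchor.
        if not item.source_person:
            raise ValueError("every item needs a human source")
        self.items[item_id] = item
        self.edges.add((item.source_person, "contributed", item_id))

    def relate(self, subject: str, relation: str, obj: str) -> None:
        # Link people, projects, decisions, and items to each other.
        self.edges.add((subject, relation, obj))

    def validate(self, item_id: str, reviewer: str) -> None:
        # The machine organises; a named human validates.
        self.items[item_id].validated_by = reviewer

    def retrieve(self, node: str) -> list[KnowledgeItem]:
        """Return only human-validated items directly linked to `node`."""
        linked = {o for s, _, o in self.edges if s == node}
        linked |= {s for s, _, o in self.edges if o == node}
        return [
            self.items[i]
            for i in sorted(linked)
            if i in self.items and self.items[i].is_validated
        ]


# Illustrative usage with made-up data.
graph = KnowledgeGraph()
graph.add_item(
    "insight-1",
    KnowledgeItem(
        statement="Example insight recorded by its author",
        source_person="Anna",
        context="Solved last quarter",
    ),
)
graph.relate("insight-1", "belongs_to", "Example Project")

pending = graph.retrieve("Anna")          # nothing is returned before sign-off
graph.validate("insight-1", reviewer="Anna")
approved = graph.retrieve("Example Project")
```

The design choice worth noticing is that retrieval filters on `validated_by`: an item can be stored and connected automatically, but it is not surfaced until a named person has vouched for it.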

Why This Matters Now

We are entering a phase where enterprises risk outsourcing not just their work but their memory to machines. If the people who own the knowledge are erased from the loop, enterprises will face the same crisis as cybersecurity: unable to distinguish between capability and narrative.

Phlow rejects that future. Our premise is simple:

- Machines should help us think better, not think for us.
- Knowledge should be anchored in people, not just in documents.
- Trust should be earned through transparency, not manufactured through algorithms.

The Future of Knowledge Requires Us

Borges warned of libraries filled with infinite, indistinguishable books. Today, enterprises risk building those libraries themselves: AI-generated, searchable, but devoid of meaning. The only safeguard is to keep humans embedded in the process.

That’s the real promise of Human-in-the-Loop Knowledge Management: not to slow down AI, but to ensure that what we keep, share, and reuse remains true to its origin.

Because knowledge without humans isn’t knowledge at all; it’s just the noise that knowledge management is trying to remove.
